Artificial intelligence (AI) is now used in many facets of our daily lives, and one such use is image generation. Because the algorithms behind AI-generated photos are trained on vast amounts of data, they can produce convincing images of people who do not exist.

A key reason for the growing popularity of AI-generated photographs is the sheer volume of data available to train these algorithms. Thanks to the growth of digital information over the past two decades, computers can now learn from an unprecedented amount of visual input.

As a result, AI-generated images are more realistic and detailed than ever before, and many people cannot distinguish them from real photographs.

But with a deluge of viral AI-generated pictures, humanity has now reached a tipping point in the ethics of AI and machine learning.

Experimentation that began enthusiastically with generative tools like ChatGPT and Midjourney is now gradually exposing the darker side of AI.

Yes, I’m referring to artificial intelligence’s most repulsive use: fake nudity.

Fake n*des, photos that have been digitally edited or generated to appear n*de, have drawn more and more attention in recent years. AI algorithms have been used to create such images for a while, but the ease with which they can now be made and shared online has led to a flood of this content on social media and other websites.

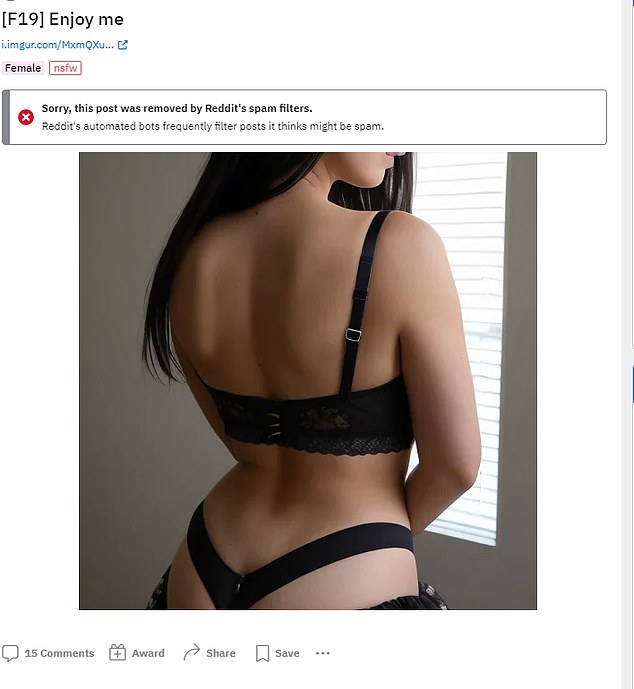

The recent stories of AI-generated images of a fictional 19-year-old woman fooling millions of people are a sobering reminder of the risks and challenges of this technology.

N*de AI-generated images of a nonexistent 19-year-old woman are currently fooling millions of people

AI-generated photographs of Claudia, a made-up 19-year-old woman, have sparked a lot of discussion on Reddit.

The images were produced by two computer science students using the AI image model Stable Diffusion, and they are so realistic that many people paid to view the purported n*de photos that Claudia offered to send privately.

Although Claudia is a made-up character, the project’s creators earned about $100 selling her pictures before their deception was revealed.

Claudia receives compliments on almost all of her Reddit posts from strangers online

On one selfie with the caption “Feeling pretty today,” many people left comments praising the fictional woman’s beauty.

While some have criticised the images as fake, many others fell for the hoax, highlighting the risk of such technology being misused. The fact that AI-generated photos persuaded people that Claudia was real raises concerns about how the technology could be used for revenge porn and blackmail.

Stability AI, the London-based start-up that created Stable Diffusion, has placed restrictions on the programme to prevent the creation of pornographic, vulgar, or offensive content. However, because the software can be easily downloaded, the company has no control over how it is used.

The emergence of Claudia’s images is a reminder of how important it is to use and govern AI-generated content responsibly. Clear ethical frameworks and norms are needed to prevent misuse of the technology and to protect people’s privacy and dignity.

To build these standards and encourage the appropriate use of AI-generated images, it is imperative that policymakers, tech corporations, and civil society collaborate.